Anyscale Endpoints

Overview


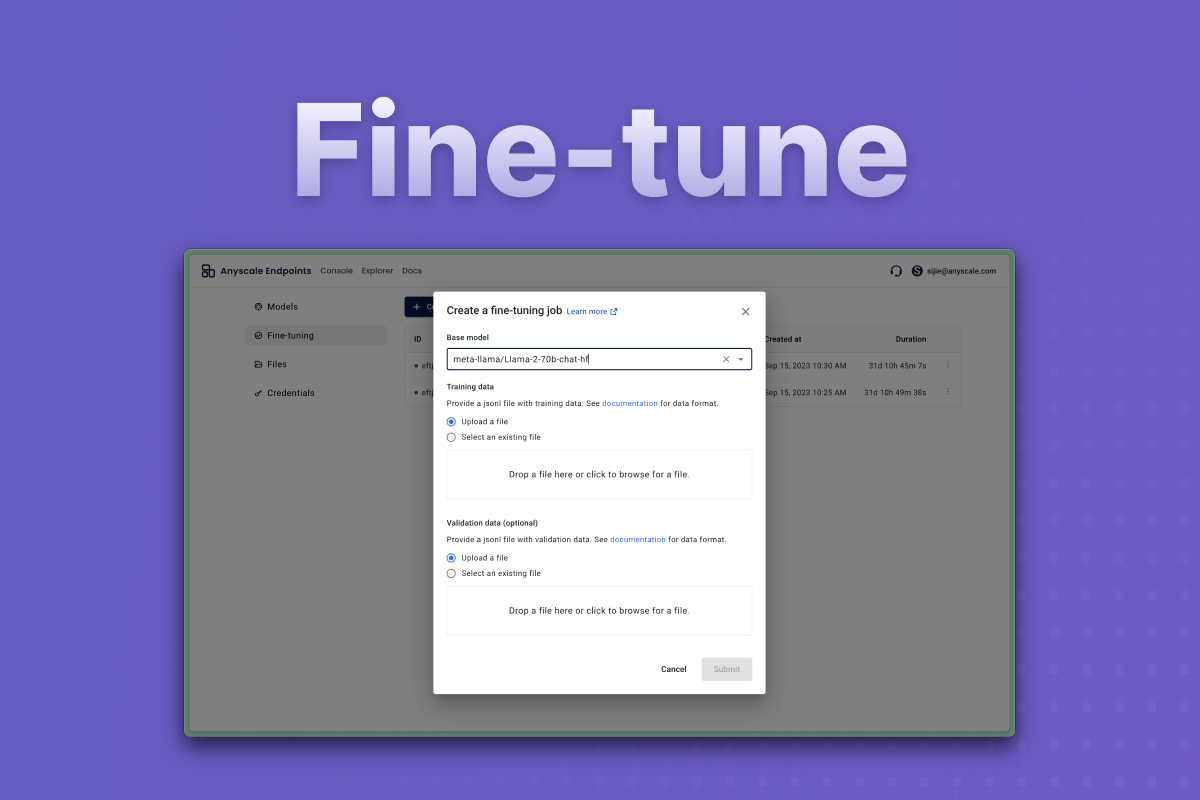

This integration makes it easy to query large language models (LLMs) and other generative AI models through Anyscale Endpoints. Sign up for Anyscale Endpoints, then use our API to query any of the available models.

You are charged for each input token you send and each token the model generates. You can view your billing and usage here.

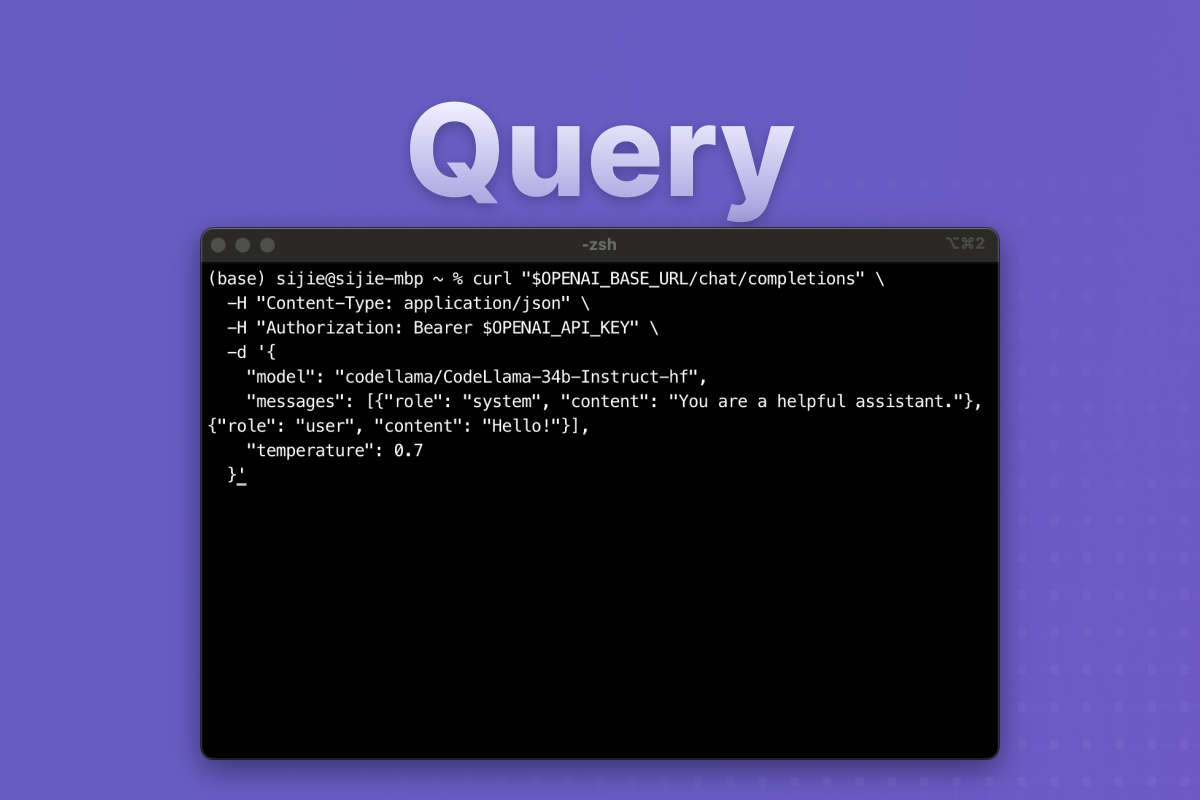

After installing this integration, you can import the OpenAI SDK into your application and start querying the available models. For more information, see our "Query a model" guide or visit the docs.
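A minimal sketch of querying a model from Python, assuming the `openai` package (v1 or later) is installed and the integration has set `OPENAI_BASE_URL` and `OPENAI_API_KEY` in your project. The model ID below is a placeholder, not a confirmed Anyscale model name, so replace it with one of the available models listed in the docs.

```python
# Placeholder model ID (assumption): substitute a model listed in the Anyscale Endpoints docs.
MODEL = "meta-llama/Llama-2-7b-chat-hf"


def build_messages(prompt: str) -> list[dict[str, str]]:
    """Wrap a single user prompt in the chat-completions message format."""
    return [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": prompt},
    ]


def ask(prompt: str) -> str:
    """Send one chat-completions request and return the model's reply text."""
    # Imported inside the function so this module still loads where the SDK is absent.
    from openai import OpenAI

    # With no arguments, OpenAI() reads OPENAI_API_KEY and OPENAI_BASE_URL from
    # the environment; this integration sets them to point at Anyscale Endpoints.
    client = OpenAI()
    response = client.chat.completions.create(
        model=MODEL,
        messages=build_messages(prompt),
        temperature=0.7,
    )
    return response.choices[0].message.content
```

Calling `ask("Say hello in one sentence.")` sends a single request and returns the reply as a string. No Anyscale-specific code is needed because the backend is selected entirely through the environment.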

Additional Information

Anyscale Endpoints is compatible with the OpenAI SDK. We use the SDK's standard OpenAI environment variables to point it at the Anyscale Endpoints backend. This integration injects these environment variables into your projects, so you can simply import the OpenAI SDK and start querying LLMs.
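If `OPENAI_BASE_URL` is missing, the OpenAI SDK uses OpenAI's own default endpoint instead of Anyscale's. A small startup check can therefore catch a project where the integration's variables were not injected. The helper below is a hypothetical illustration and is not part of the integration or the SDK.

```python
import os
from typing import Mapping

# The two variables this integration injects into your project.
REQUIRED_VARS = ("OPENAI_BASE_URL", "OPENAI_API_KEY")


def anyscale_settings(env: Mapping[str, str] = os.environ) -> tuple[str, str]:
    """Return (base_url, api_key), raising if either variable is missing or empty."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return env["OPENAI_BASE_URL"], env["OPENAI_API_KEY"]
```

Calling `anyscale_settings()` at application startup fails fast with a clear message instead of sending requests to the wrong backend. The `env` parameter exists only so the check can be exercised with a plain dictionary.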

Because Anyscale Endpoints shares these environment variables with OpenAI, you must make some changes if you want to query both the OpenAI and Anyscale Endpoints backends. You can follow the guide here.
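One way to use both backends from the same process is to stop relying on the shared variables and pass `base_url` and `api_key` to each client explicitly. This sketch is not necessarily the approach the linked guide takes. `MY_OPENAI_API_KEY` is a hypothetical variable you would create for your OpenAI key, because the integration's `OPENAI_API_KEY` holds the Anyscale token.

```python
import os
from typing import Mapping

# OpenAI's public API base URL.
OPENAI_DEFAULT_BASE_URL = "https://api.openai.com/v1"


def client_kwargs(backend: str, env: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Return explicit constructor arguments for the requested backend."""
    if backend == "anyscale":
        # The integration injects these two variables for Anyscale Endpoints.
        return {"base_url": env["OPENAI_BASE_URL"], "api_key": env["OPENAI_API_KEY"]}
    if backend == "openai":
        # Hypothetical variable name for your own OpenAI key.
        return {"base_url": OPENAI_DEFAULT_BASE_URL, "api_key": env["MY_OPENAI_API_KEY"]}
    raise ValueError(f"Unknown backend: {backend!r}")


def make_client(backend: str):
    """Build an OpenAI SDK client bound to one backend."""
    from openai import OpenAI  # requires the openai package, v1 or later

    return OpenAI(**client_kwargs(backend))
```

With this approach, `make_client("anyscale")` and `make_client("openai")` can coexist in one application, because neither client depends on which backend the shared environment variables happen to point to.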

Environment variables

OPENAI_BASE_URL: Points the OpenAI SDK at Anyscale Endpoints as its backend.

OPENAI_API_KEY: A secret token used to authenticate your requests to Anyscale Endpoints. You can manage this token on the Anyscale Endpoints credentials page. The token expires after 2 years, but you can rotate it on your project's environment variables settings page.
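To illustrate how the two variables combine, here is a sketch that builds a chat request by hand using only the Python standard library. It assumes the backend exposes the OpenAI-compatible `/chat/completions` route under `OPENAI_BASE_URL` and accepts the key as a Bearer token, as the OpenAI SDK expects. The request is only constructed here, not sent.

```python
import json
import os
import urllib.request
from typing import Mapping


def build_chat_request(
    prompt: str, model: str, env: Mapping[str, str] = os.environ
) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST from the injected variables."""
    # OPENAI_BASE_URL selects the backend; strip any trailing slash before appending the route.
    base_url = env["OPENAI_BASE_URL"].rstrip("/")
    body = json.dumps(
        {"model": model, "messages": [{"role": "user", "content": prompt}]}
    ).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=body,
        headers={
            # OPENAI_API_KEY authenticates the request as a Bearer token.
            "Authorization": f"Bearer {env['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` would send it. After you rotate the token, new requests pick up the new value once the updated `OPENAI_API_KEY` is present in the environment.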